You can constrain a pod so that it is scheduled only on particular nodes. For example, you may want a pod to run on a node that has an SSD attached. In that case, you can steer the pod to that node using any one of the following strategies:

1. Node Selector

2. Node Name

3. Taints and tolerations

4. Affinity and anti-affinity

1. Node Selector

Here we assign a pod to a particular node using a node label. We are going to schedule a pod on node cpu3.

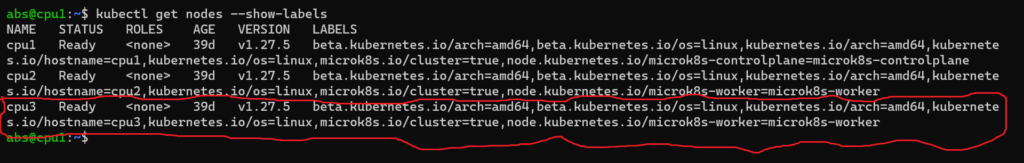

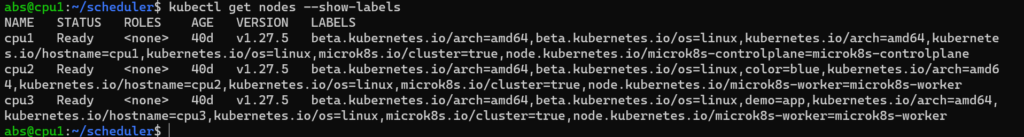

1. List the nodes along with their labels. Here cpu3 does not have the label demo=app.

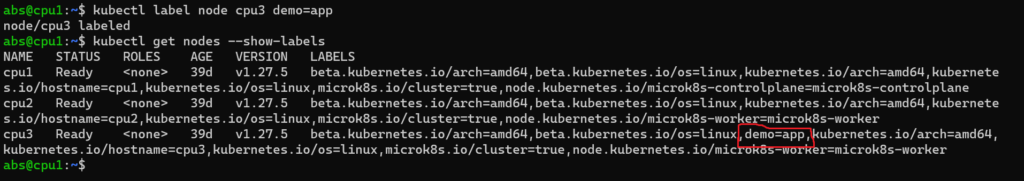

2. Label cpu3 with demo=app.

The node cpu3 is now labelled demo=app.

Next, we schedule the nginx pod on cpu3. The pod configuration file has a field called spec.nodeSelector; under it, specify the label of the node on which the pod should run.

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        env: test
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        demo: app

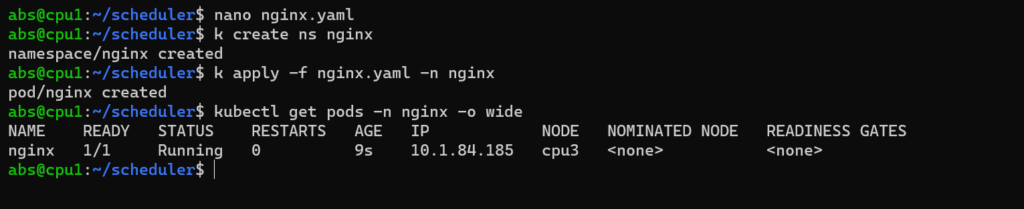

Create a file called nginx, place the above configuration in it, and apply it using the following command.

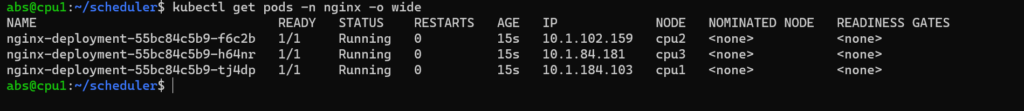

The output shows that the nginx pod was scheduled on node cpu3.

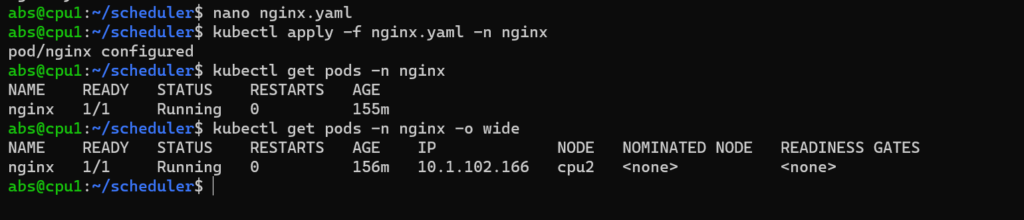

2. Node Name

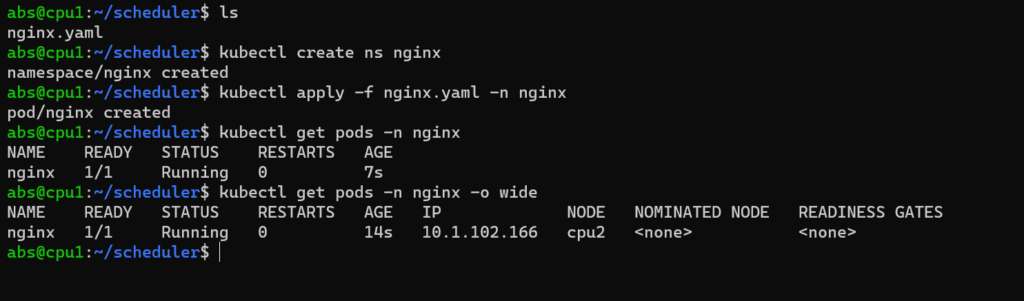

Here we will see how to assign a pod to a node using the node name.

We are going to schedule a pod on node cpu2. In the pod configuration file, we set the field spec.nodeName to the name of that node.

The nginx pod has been scheduled on cpu2 using nodeName.
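The configuration for this step appears only as a screenshot, so here is a minimal sketch of what such a pod file could look like. It reuses the nginx pod from the node selector example and assumes that nothing else needs to change; the only addition is spec.nodeName.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  # nodeName binds the pod directly to the named node and skips the scheduler.
  nodeName: cpu2
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
```

Because the scheduler is bypassed, the pod is not placed anywhere else if cpu2 does not exist or cannot run it.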

3. Taints and Tolerations

A taint is a node property that repels certain pods. A toleration is applied to a pod and allows it to be scheduled on nodes with matching taints. As an analogy, at home we light a mosquito coil so that mosquitoes do not enter. Mosquitoes cannot tolerate the smell, so they stay out; a mosquito that could tolerate the smell would still be able to enter.

The syntax for adding a taint to a node is as follows:

kubectl taint nodes node-name key=value:taint-effect

The taint effects are as follows:

- NoSchedule: New pods are not scheduled on the node unless they tolerate the taint. Pods that are already running on the node are not evicted.

- PreferNoSchedule: The scheduler tries to avoid placing pods that do not tolerate the taint on the node, but this is a preference, not a guarantee.

- NoExecute: As soon as a NoExecute taint is applied to a node, existing pods that do not tolerate it are evicted from the node, and new pods that do not tolerate it are not scheduled there.
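To illustrate the NoExecute effect, the sketch below shows a pod that tolerates a NoExecute taint for a limited time. It assumes a taint demo=app:NoExecute on the node; the pod name nginx-noexecute and the 300-second value are hypothetical and chosen only for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-noexecute   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  - key: "demo"
    operator: "Equal"
    value: "app"
    effect: "NoExecute"
    # Optional: if the taint is added while the pod is running, the pod
    # stays on the node for this many seconds and is then evicted.
    tolerationSeconds: 300
```

Without tolerationSeconds, a pod with a matching NoExecute toleration stays on the node indefinitely.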

Here we apply a taint to cpu2 as follows:

kubectl taint nodes cpu2 demo=app:NoSchedule

The matching toleration is added to the nginx pod definition file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      tolerations:
      - key: "demo"
        operator: "Equal"
        value: "app"
        effect: "NoSchedule"

However, a toleration does not guarantee that the pod is always scheduled on the tainted node; it may also be scheduled on another node. The toleration only means that, when the scheduler considers the tainted node for this pod, the taint does not rule that node out.
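If the pod must land on the tainted node, one common approach is to pair the toleration with a node selector. The sketch below assumes that cpu2 has additionally been given a label dedicated=demo; that label is hypothetical and is not part of the setup above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  # The toleration lets the pod onto the node tainted with demo=app:NoSchedule.
  tolerations:
  - key: "demo"
    operator: "Equal"
    value: "app"
    effect: "NoSchedule"
  # The node selector restricts the pod to nodes carrying this label
  # (assumed here to be applied only to cpu2).
  nodeSelector:
    dedicated: demo
```

The taint keeps other pods away from the node, and the selector keeps this pod away from other nodes.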

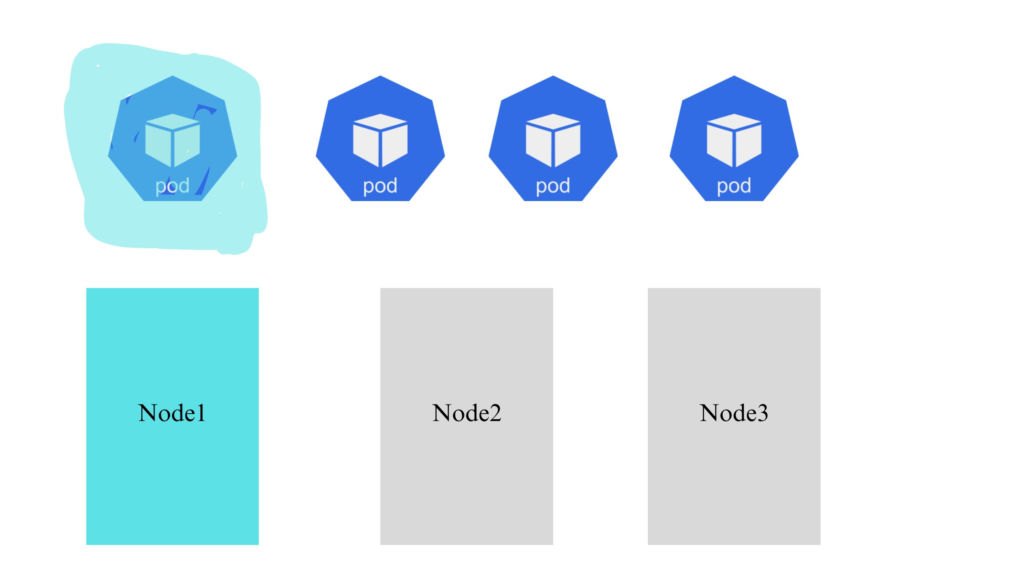

Here Node1 has a taint applied. The first pod has a toleration that matches the taint on Node1.

Now we will schedule the pods on the nodes.

Here the pod with the toleration has been scheduled on the tainted node. All other pods are scheduled on the other nodes.

4. Affinity and Anti-affinity

Affinity and anti-affinity expand the types of constraints you can define. The affinity feature consists of two types of affinity:

1. Node affinity

2. Inter-pod affinity/anti-affinity

Node Affinity

Node affinity is conceptually similar to nodeSelector, but it lets you define more expressive constraint rules. The two types of node affinity are as follows:

1. requiredDuringSchedulingIgnoredDuringExecution: the pod is scheduled on a node only when the node's labels satisfy the rules in the pod spec. If the node labels change later, the pod is not evicted.

2. preferredDuringSchedulingIgnoredDuringExecution: the scheduler tries to place the pod on a node whose labels satisfy the rules, but this is preferred, not required. If no node matches, the pod is still scheduled on another node.

1. requiredDuringSchedulingIgnoredDuringExecution

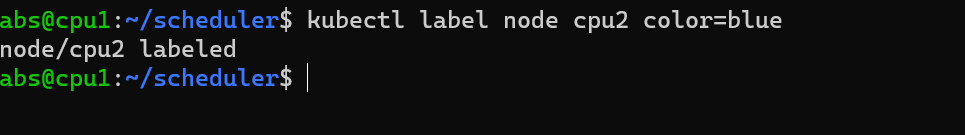

Here we are going to assign pods to cpu2, so we label cpu2 with color=blue.

kubectl label node cpu2 color=blue

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: color
                    operator: In
                    values:
                    - blue

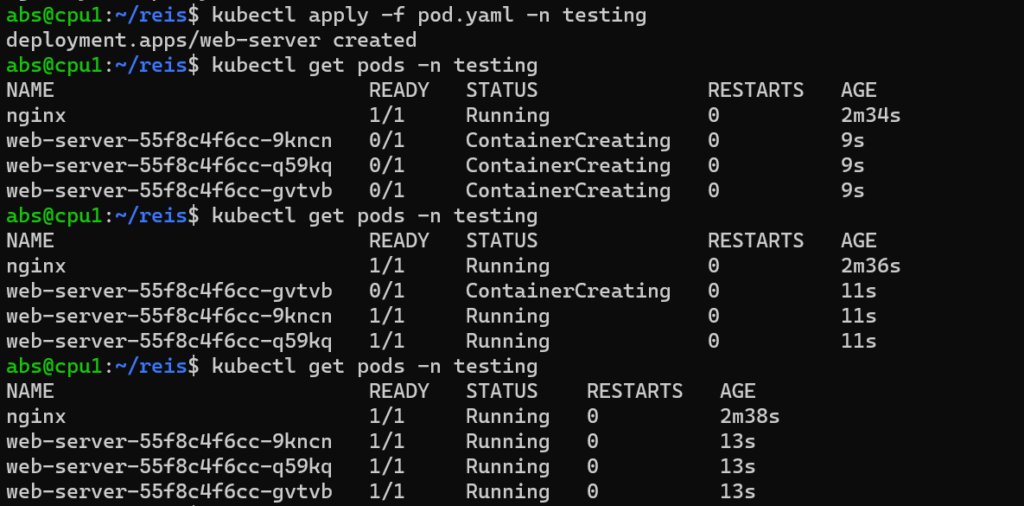

Apply the above configuration and check whether all the pods were scheduled on cpu2.

As per our affinity rule, all the pods of the deployment were scheduled on cpu2.

2. preferredDuringSchedulingIgnoredDuringExecution

Here no node is labelled color=red. We are going to deploy pods with a preferredDuringSchedulingIgnoredDuringExecution affinity rule that asks for the label color=red.

We will see whether the pods are scheduled on a node or not.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80
          affinity:
            nodeAffinity:
              # The preferred form takes a list of weighted preferences,
              # not nodeSelectorTerms.
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 1
                preference:
                  matchExpressions:
                  - key: color
                    operator: In
                    values:
                    - red

With preferredDuringSchedulingIgnoredDuringExecution, the scheduler tries to place the pod on a node with a matching label. If it finds such a node, it schedules the pod there. Otherwise, it schedules the pod on any other available node.
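Each preferred rule carries a weight between 1 and 100, which the scheduler uses when ranking nodes that pass all required constraints. The sketch below is an illustration only: the pod name and the two weights are hypothetical, and it assumes nodes may be labelled color=red or color=blue.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-preferred   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      # Strong preference for nodes labelled color=red.
      - weight: 80
        preference:
          matchExpressions:
          - key: color
            operator: In
            values:
            - red
      # Weaker preference for nodes labelled color=blue.
      - weight: 20
        preference:
          matchExpressions:
          - key: color
            operator: In
            values:
            - blue
```

If neither label exists on any node, the pod is still scheduled; the preferences only influence the ranking.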

Inter-pod Affinity and Anti-affinity

Inter-pod affinity and anti-affinity allow you to constrain which nodes your Pods can be scheduled on based on the labels of Pods already running on that node, instead of the node labels.

Similar to node affinity, inter-pod affinity and anti-affinity have two types:

- requiredDuringSchedulingIgnoredDuringExecution

- preferredDuringSchedulingIgnoredDuringExecution
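For inter-pod rules, the preferred form wraps each rule in a podAffinityTerm with a weight. A minimal sketch of that shape follows; the pod name web-app-preferred and the weight are hypothetical, and the label app=web-store is borrowed from the example later in this section.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-app-preferred   # hypothetical name
spec:
  containers:
  - name: web-app
    image: nginx:1.16-alpine
  affinity:
    podAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          # Prefer nodes that already run a pod labelled app=web-store.
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - web-store
          # "Same place" is defined per node by using the hostname label.
          topologyKey: "kubernetes.io/hostname"
```

Unlike the required form, this pod is still scheduled when no node runs a matching pod.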

Pod Affinity Example

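A pod affinity rule schedules a pod based on the labels of pods that are already running. The rule below places each web-server pod on a node that already runs a pod with the label app=web-store. Because the topologyKey is kubernetes.io/hostname, the pods must share the same node rather than just the same zone.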

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: web-store
      replicas: 3
      template:
        metadata:
          labels:
            app: web-store
        spec:
          affinity:
            podAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - web-store
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: web-app
            image: nginx:1.16-alpine

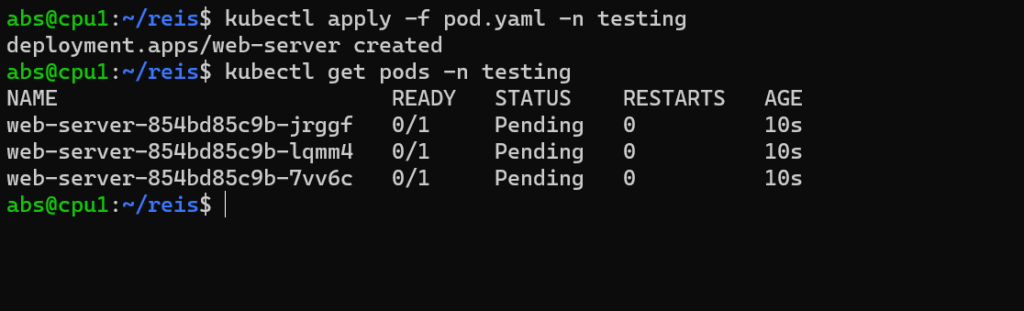

Here we are trying to run the nginx pods using the above YAML configuration. The scheduler tries to place each pod on a node that already has a pod with the label app=web-store. Now let's schedule the pods.

All the pods are in the Pending state.

From this we can see that the affinity rule is being enforced.

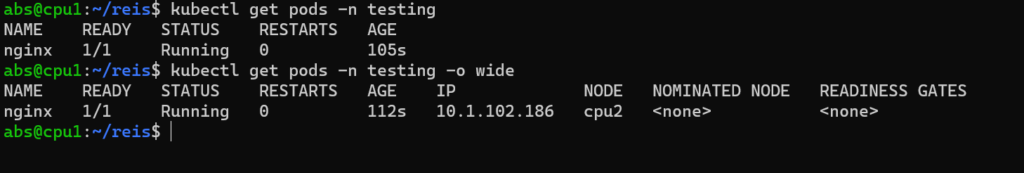

Now run one pod with the label app=web-store on a node, and then apply this file again.
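The pod used for this step is shown only in the screenshot. A minimal sketch of such a pod could look like the following; the name web-store-seed is hypothetical, and pinning it to cpu2 with nodeName is an assumption made so that it matches the result described below.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-store-seed   # hypothetical name
  labels:
    # This label is what the deployment's podAffinity rule looks for.
    app: web-store
spec:
  # Assumption: place the pod on cpu2 directly.
  nodeName: cpu2
  containers:
  - name: web-app
    image: nginx:1.16-alpine
```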

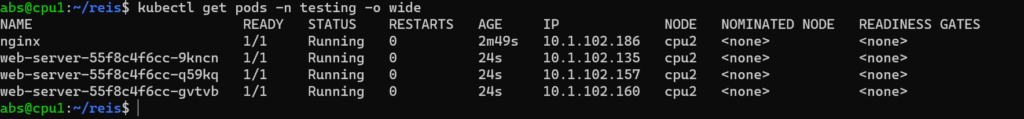

Apply the deployment again using the above configuration file and check whether the pods are Running or Pending.

All the pods are scheduled on cpu2 because we previously ran one pod with the same label there.

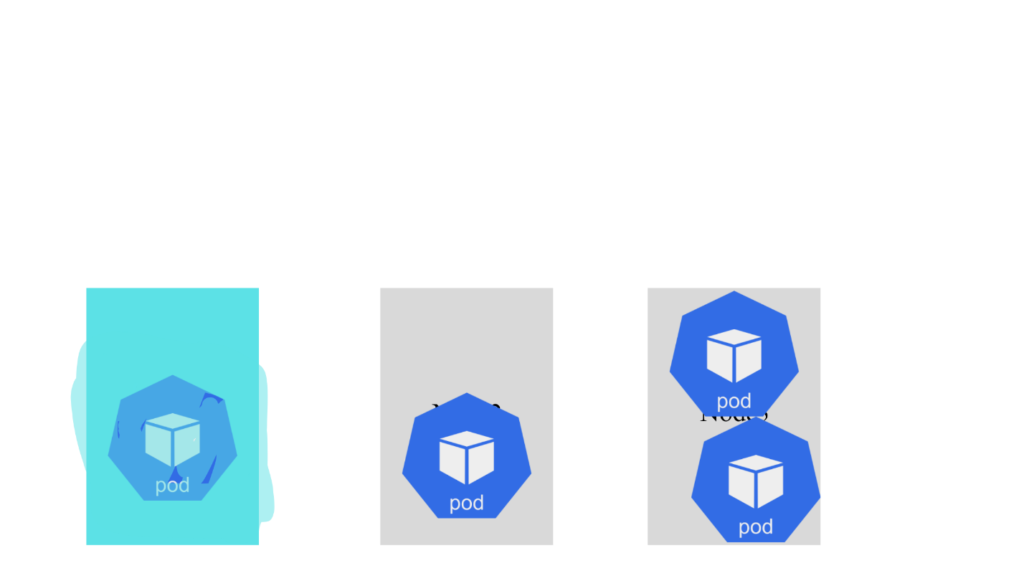

Pod Anti-affinity

We want each node to run at most one pod of this deployment. For that purpose we use an anti-affinity rule. Let's schedule the pods using the configuration file below.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis-cache
    spec:
      selector:
        matchLabels:
          app: store
      replicas: 3
      template:
        metadata:
          labels:
            app: store
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - store
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: redis-server
            image: redis:3.2-alpine